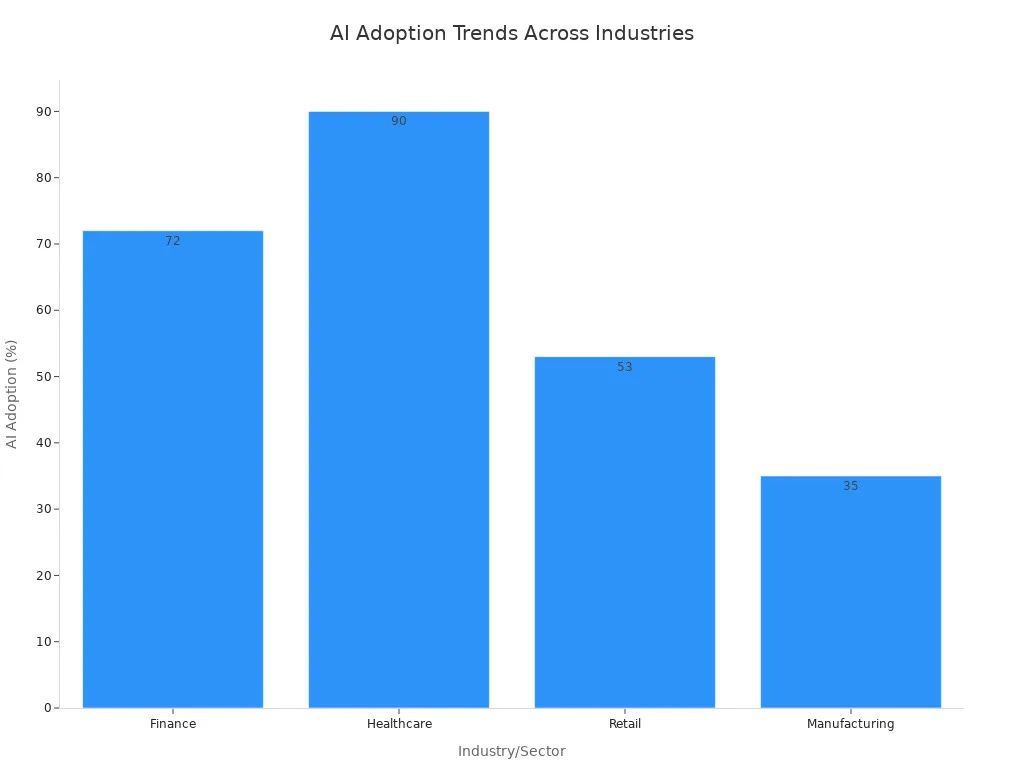

Microsoft plays a pivotal role in shaping global AI governance. As AI adoption continues to surge, with 78% of organizations utilizing AI in 2024, the need for robust regulatory frameworks becomes increasingly critical. The projected market value of AI, reaching USD 757.58 billion by 2025, further underscores the urgency of establishing comprehensive guidelines.

This blog delves into Microsoft’s initiatives, outlining the company’s vision and anticipated contributions by 2025. Microsoft is committed to fostering the responsible development and deployment of AI, aiming to build public trust in the technology. Its efforts extend to helping establish regulatory standards worldwide and strengthening global AI governance. This dedication to responsible AI is a cornerstone of its strategy.

Key Takeaways

Microsoft helps make rules for AI around the world. They want AI to be safe and fair for everyone.

Microsoft uses a special program called ‘responsible AI.’ This program guides how they build and use AI systems.

Microsoft has tools to check AI. These tools make sure AI is fair and works correctly.

Microsoft works with other groups and governments. They share ideas to create good AI rules everywhere.

Microsoft wants to balance new AI ideas with being responsible. This helps build trust in AI’s future.

Understanding AI Governance

Defining Core Principles

Good AI governance needs strong rules. These rules guide how AI is made and used. They make sure AI helps people, and they stop AI from causing problems. Important AI principles are:

Transparency: We must understand AI systems. People need to know how AI works. They need to know what information it uses. They need to know why it makes choices.

Accountability: People who make AI and use it must be responsible. They are responsible for what AI does. This builds trust. It protects people AI affects.

Fairness: AI systems should not be unfair. They should not treat people differently. They must give good results for everyone.

Ethics: People must think about what is right and wrong with AI. This includes privacy. It includes asking for permission. It includes stopping harm.

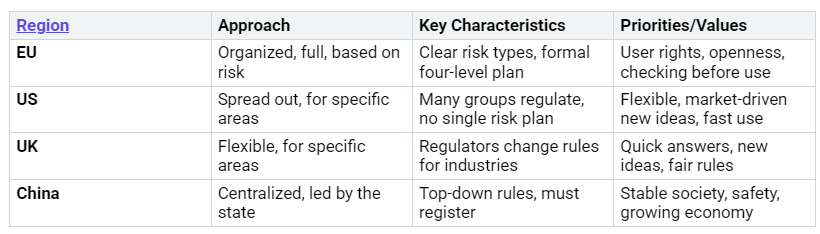

Global AI Policy Landscape

The world has different ways to manage AI. Different places have different plans. These plans show what they care about.

Some countries take a risk-based approach. They group AI systems by how much harm they could do. The EU’s AI Act is an example: it puts AI into four risk groups. Other countries use principle-based rules, which focus on general good behavior. The UK’s “pro-innovation approach” works this way and does not rely on strict laws. Many countries mix the two. The United States, for example, uses existing government rules alongside good behavior standards.

This variety in global AI governance shows an ongoing effort to balance new ideas with being responsible. It also shows how hard it is to make worldwide AI governance rules.
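The risk-based grouping can be pictured as a small lookup structure. The sketch below is only an illustration: the four tier names follow the way the EU AI Act is commonly summarized, while the `EXAMPLE_USE_CASES` table and the `describe` helper are hypothetical names made up for this post. Real classification depends on the legal text and on how a system is actually used.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers commonly used to summarize the EU AI Act."""
    UNACCEPTABLE = "prohibited outright"
    HIGH = "allowed, subject to strict obligations"
    LIMITED = "allowed, subject to transparency duties"
    MINIMAL = "no additional obligations"


# Hypothetical lookup table, for illustration only. It is not legal advice
# and not an official mapping.
EXAMPLE_USE_CASES = {
    "social scoring by public authorities": RiskTier.UNACCEPTABLE,
    "CV screening for hiring": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "email spam filter": RiskTier.MINIMAL,
}


def describe(use_case: str) -> str:
    """Return a one-line summary of the tier assigned to a known example."""
    tier = EXAMPLE_USE_CASES[use_case]
    return f"{use_case}: {tier.name.lower()} risk ({tier.value})"


if __name__ == "__main__":
    for case in EXAMPLE_USE_CASES:
        print(describe(case))
```

A principle-based approach, by contrast, would not have a table like this at all. It would state general expectations and leave the details to regulators in each sector.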

Microsoft in AI Governance: Approach and Impact

Microsoft helps shape AI governance. It uses a responsible AI program. This program guides how to make and use AI fairly. It sets up rules inside the company. It has special principles for responsible AI. Microsoft really cares about responsible AI. They use these practices in all AI work.

Internal AI Programs

Microsoft has many internal AI programs. These make sure responsible AI is developed. The Office of Responsible AI (ORA) sets clear rules. It has a step-by-step check system. This system makes sure responsible AI practices are used. A company-wide council helps. It makes sure Microsoft’s responsible AI Standard is followed. It also checks policies. There is also a group of responsible AI experts. They review how AI affects things. These experts help AI teams.

A key tool supports this work, and it is a required part of the responsible AI check. The tool records projects and guides AI makers as they check impacts. It makes work easier for the expert reviewers and builds responsible AI into software development.

These programs improve how teams work, help new ideas grow, and promote good AI use. Microsoft also improves its products by testing them inside the company and gathering feedback. It often uses a ‘Customer Zero’ method, in which employees are the first to use new digital tools. This also makes the employees’ own work better.

The company’s AI Governance Framework sets rules, checks risks, applies security controls, and offers training. Microsoft says good choices are key for AI, so it keeps teaching good AI practices. Being open and accountable builds trust: AI choices can be traced, and people can step in. Special groups such as the AI Ethics Committee and the Trustworthy Responsible AI Network (TRAIN) help make sure rules are followed and global standards are met. Microsoft also uses AI tools to watch for unfairness and fix it.

Core AI Principles

Microsoft’s responsible AI program has six main AI principles. These guide how AI systems are made. They also guide how they are used. These principles make sure AI helps people. They also reduce possible harm.

Fairness: AI systems must treat everyone fairly. They should not be biased. They should give good results for all.

Reliability and safety: AI systems must work well. They must be safe. They should do what they are supposed to. They should not cause harm.

Privacy and security: AI systems must be secure. They must respect privacy. They should keep data private. They should stop unwanted access.

Inclusiveness: AI systems must help everyone. They should include all people. They should serve many different groups.

Transparency: AI systems must be clear. Users should know how they make choices.

Accountability: People must be in charge of AI systems. This means people watch over AI. They are responsible for what it does.

These AI principles are the base. They show Microsoft’s promise for responsible AI.

Aether Committee’s Role

The Aether Committee is an important part of Microsoft’s AI governance plan. This group advises Microsoft leaders on responsible AI problems and opportunities. It started in 2016. It has working groups that focus on responsible AI tech. Researchers and engineers from Microsoft Research and other parts of the company lead these groups.

The committee helps a lot with ethical AI governance. It does this by:

Reviewing AI Projects: It checks AI systems. It looks for ethical issues. It checks how they affect society. It checks if they follow Microsoft’s responsible AI principles.

Providing Guidance: It gives helpful advice. This makes sure AI systems are ethical.

Fostering Collaboration: It brings experts together. They work on the many challenges of AI governance.

The Aether Committee makes sure Microsoft’s AI ideas are good. It makes sure they help society.

Tools and Global Initiatives

Microsoft uses tools. It also uses plans. These help with its AI governance strategy. These tools help developers. They also help other groups. They make sure AI is made well. They make sure AI is used well.

Responsible AI Toolbox

Microsoft has a Responsible AI Toolbox. It has many open-source tools that check AI models and make them better. They check fairness, accuracy, and how models explain their choices. The main pieces are:

Responsible AI Dashboard: It brings everything together. It helps check responsible AI, find and fix model errors, and understand choices. It uses tools like Error Analysis, Fairlearn, InterpretML, and EconML.

Error Analysis dashboard: It finds model errors and shows where the model does not work well.

Explanation dashboard: It helps understand predictions. InterpretML helps with this.

Fairness dashboard: It checks for unfairness using fairness measures. Fairlearn helps with this.

Responsible AI Tracker: It helps manage, track, and compare tests. This makes improvements faster.

Responsible AI Mitigations library: It helps improve models by fixing errors in data.

BackwardCompatibilityML: It trains models in a way that avoids introducing new errors. It shows visualizations to compare models.
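To make “fairness measures” more concrete, here is a minimal sketch of one common measure, the demographic parity difference. It is written in plain Python and does not call Fairlearn, although Fairlearn offers a metric with the same name in `fairlearn.metrics`. The helper functions and the tiny data set are assumptions made up for this example, not part of the toolbox.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate.

    0.0 means every group receives positive predictions at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up predictions (1 = approved) for two hypothetical groups.
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(y_pred, group)
gap = demographic_parity_difference(y_pred, group)
print(rates, gap)
```

In this toy data, group "a" is approved three times out of four and group "b" once out of four, so the gap is 0.5. A fairness dashboard reports numbers like this, along with other measures, so that teams can decide whether a model needs fixing.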

Open-Source Contributions

Microsoft helps open-source projects that make responsible AI better. The Cognitive Toolkit is one such project from Microsoft. It lets developers try new things and make AI tools better. The Responsible AI Toolbox is also open-source. It has tools like InterpretML, Error Analysis, and Fairlearn. InterpretML helps people understand models. Error Analysis finds the parts of the data that cause model failures. Fairlearn finds and fixes unfairness in AI. These open-source efforts show Microsoft’s promise to keep responsible AI open and to work as a team with others.
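The idea of finding “the parts of the data that cause model failures” can be sketched in a few lines. The example below is a simplified stand-in and not the Error Analysis tool itself: it groups records by a single feature and ranks the groups by error rate, while the real tool finds such groups in a more automated way. The function names and the sample records are hypothetical.

```python
from collections import defaultdict


def error_rate_by_cohort(records, feature):
    """Share of wrong predictions for each value of the given feature."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for row in records:
        cohort = row[feature]
        totals[cohort] += 1
        if row["prediction"] != row["label"]:
            errors[cohort] += 1
    return {c: errors[c] / totals[c] for c in totals}


def rank_cohorts(records, feature):
    """Cohorts sorted from highest to lowest error rate."""
    rates = error_rate_by_cohort(records, feature)
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Made-up evaluation records for illustration.
records = [
    {"device": "mobile", "label": 1, "prediction": 0},
    {"device": "mobile", "label": 0, "prediction": 1},
    {"device": "mobile", "label": 1, "prediction": 1},
    {"device": "mobile", "label": 0, "prediction": 1},
    {"device": "desktop", "label": 1, "prediction": 1},
    {"device": "desktop", "label": 0, "prediction": 0},
    {"device": "desktop", "label": 1, "prediction": 0},
    {"device": "desktop", "label": 0, "prediction": 0},
]

ranking = rank_cohorts(records, "device")
print(ranking)
```

Here the model is wrong far more often on mobile records than on desktop records, even though its overall accuracy looks acceptable. Surfacing that kind of hidden gap is the point of error analysis.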

Global Partnerships

Microsoft works with governments all over the world. These partnerships produce AI governance plans that help AI grow and help many groups. Microsoft shares its knowledge and its best ways of doing things, which helps shape rules and policies. The partnerships aim for AI governance plans that balance new ideas with good behavior. They also help people everywhere understand responsible AI ideas.

Influencing Standards and Regulations

Advocating Global Regulations

Microsoft helps shape global AI rules. It supports plans for safe and responsible AI. For example, Microsoft backs a framework called the Conditional Access Framework for Zero Trust. This framework helps manage access rules within the Zero Trust approach of Microsoft Entra ID. It uses clear names and layers of rules, which helps organize policies. Rules apply at many levels, from basic rules to extra ones.

Microsoft also joins many global efforts. It helped start the Cybersecurity Tech Accord, a group that works together to make global security better, stop attacks on people, and keep users safe. Microsoft also puts money into cybersecurity and works with UNIDIR, the United Nations Institute for Disarmament Research. It supports the Roundtable for AI, Security, and Ethics (RAISE), which wants responsible AI use in national security to match international law. RAISE gives ideas for good development and helps lower risks.

Microsoft helped start the CyberPeace Institute (CPI), a group that helps people, makes digital safety stronger, and speaks out against bad AI use. It also started the Coalition to Reduce Cyber Risk (CRx2), which works with governments to promote cybersecurity plans that are risk-based, clear, and flexible. Microsoft is also part of the Frontier Model Forum (FMF). This group makes frontier AI safer and focuses on big risks, such as chemical, biological, radiological, and nuclear (CBRN) threats.

Microsoft’s full AI security plan has three parts: stopping bad AI use, helping defenders use AI, and making sure AI itself is safe. Research and teamwork among many groups support this plan. These efforts show Microsoft’s role and its promise for global standards.

Promoting Ethical AI Governance

Microsoft promotes good AI governance in several ways. It works with OpenAI on AI governance plans, which helps shape responsible AI use all over the world. Microsoft shares ideas about responsible AI and helps customers learn these practices. The company made an AI assurance program. This program helps customers use their AI systems responsibly.

Microsoft shares its Responsible AI Standard. This plan is the result of many years of work. It sets rules for making products responsibly and gives advice to other groups. The company also has a website called “Empowering responsible AI practices.” The site has rules, research, and engineering information for many roles in an organization. It states Microsoft’s promise to make AI safe, secure, and trusted.

Microsoft promotes a full plan for AI governance. This plan is more than just following rules: it also aims for good new ideas and for people’s trust. It has three main parts: data governance, AI governance, and regulatory governance. Data governance is key because correct data helps AI work well. This full plan helps groups build trusted AI systems, manage risks, and follow rules.

Future AI Policy Shaping

Microsoft keeps shaping future AI rules. Its 2025 Responsible AI Transparency Report shows its full AI governance plan. The report talks about automatic security and updated rules. It shows better responsible AI tools that measure more risks, including risks in images and sounds, and that support agentic systems. Microsoft got ready for new rules, including the EU’s AI Act, and gives customers help to follow them. The company keeps a steady way to manage risks, which includes checks before use, red teaming, and oversight of big AI releases. Microsoft also gives advice on high-impact AI uses through its Sensitive Uses and Emerging Technologies team. This is very important for generative AI, especially in healthcare.

The 2025 Responsible AI Transparency Report also draws on research to understand AI problems. Microsoft started the AI Frontiers Lab, which puts money into AI tech for capability and safety. Microsoft works with groups worldwide to make clear governance plans. It published a book on governance and is improving standards for AI testing. Going forward, Microsoft will focus on three things to keep its promise: earning trust for AI use, continuing its responsible AI work, and making sure its efforts change as the world changes.

Microsoft shared its Frontier Governance Framework in February 2025. This plan has new rules for making and using models. It requires documentation of checks, and this documentation goes to the leaders in charge of Microsoft’s AI governance. The documentation includes a recommendation on safe use. A positive recommendation says the model is safe enough: its risks are low or medium, and its benefits are greater than its risks. Leaders then make the final choice and approve use.

The plan is updated every six months. Microsoft’s Chief Responsible AI Officer reviews changes. Where appropriate, changes become public at the time they take effect.

The plan has early checks that happen before training and before use. Models are also checked every six months to capture progress from changes made after training. Any model with new capabilities gets a deeper check to find its risk level. Microsoft shares public information about model capabilities and limits, including risk level.

The plan follows Microsoft’s other rules, including internal checks and board oversight. Microsoft workers can report worries through existing channels and are protected from retaliation.
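The approval logic described above can be sketched as a simple gate. This is a hypothetical illustration of the reasoning, not Microsoft’s actual process or tooling. The class and function names are invented, the `HIGH` level is an assumption (the text here names only low and medium), and treating final approval as “recommendation plus leadership sign-off” is one possible reading.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3  # Assumed level above medium; not named in the text above.


@dataclass
class Assessment:
    """Hypothetical summary of a pre-deployment review."""
    model_name: str
    risk_level: RiskLevel
    benefits_outweigh_risks: bool


def recommend_deployment(assessment: Assessment) -> bool:
    """Recommend use only if risk is low or medium AND benefits outweigh risks."""
    return (
        assessment.risk_level <= RiskLevel.MEDIUM
        and assessment.benefits_outweigh_risks
    )


def final_decision(assessment: Assessment, leadership_approves: bool) -> bool:
    """Leaders make the final call; here they can only approve a recommended model."""
    return recommend_deployment(assessment) and leadership_approves


if __name__ == "__main__":
    review = Assessment("example-model", RiskLevel.MEDIUM, True)
    print(recommend_deployment(review))                     # recommendation step
    print(final_decision(review, leadership_approves=True))  # sign-off step
```

The point of the two-step shape is that a favorable risk rating alone is not enough. Someone accountable still has to make the final choice.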

Projected Impact and Challenges by 2025

Standardizing AI Ethics

Making AI ethics the same everywhere is hard. There is no one rule for everyone. Human rights could be a base, but people understand them differently. Western ideas often shape these rights and may overlook cultures that care more about the group. Privacy rules are also not the same everywhere, which causes problems for shared ethics. We must accept cultural differences, but we still need common AI ethics. A lack of transparency, known as the “black box problem,” causes issues, especially in important areas. Not knowing who is responsible makes laws harder to write and global rules tougher to agree on.

Addressing Global Disparities

Differences in AI around the world need care. Rich countries get most AI benefits. Billions of people have no internet, so they miss out on AI jobs. AI rules must help pay for internet access so that everyone can use AI. We also need local AI development, because models trained on Western data can be unfair in other places. Africa has big limits: it has little AI money, and its internet is not good. Without help, many countries will only use AI built elsewhere instead of building their own. The US AI plan has many layers. It updates rules and uses diplomacy. This helps AI grow fast and also fixes big problems.

Balancing Innovation and Responsibility

Microsoft plays an important part in making rules for AI around the world. Its responsible AI program, its tools, and its influence on policy show a strong promise. Microsoft will keep leading by balancing new AI ideas with being responsible, which builds trust in AI’s future. Working with others, the company aims to make sure AI is developed well and to help create a trusted AI future.

FAQ

What is Microsoft’s main way to handle AI rules?

Microsoft focuses on responsible AI. It follows six key ideas: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. The company has internal programs and special groups. These help make and use AI in a good way.

What does the Aether Committee do?

The Aether Committee advises Microsoft leaders. It talks about AI problems and opportunities. It looks at AI projects and checks for ethical issues. The committee gives advice and helps experts work together.

How does Microsoft help with world AI rules?

Microsoft advocates for global rules. It supports plans for safe and responsible AI. The company works with governments and other groups and shares what it knows. This helps make AI rules everywhere.

What is the Responsible AI Toolbox?

The Responsible AI Toolbox has free, open-source tools. These tools help people who make AI check their models and make them better. They check for fairness, accuracy, and explainability. The toolbox includes things like error analysis and fairness dashboards.